The state of Twitter: Mitt Romney and Indonesian Politics

It’s no secret that a lot of people use ScraperWiki to search the Twitter API or download their own timelines. Our “basic_twitter_scraper” is a great starting point for anyone interested in writing code that makes data do stuff across the web. Change a single line, and you instantly get hundreds of tweets that you can then map, graph or analyse further.

So, anyway, Tom and I decided it was about time to take a closer look at how you guys are using ScraperWiki to draw data from Twitter, and whether there’s anything we could do to make your lives easier in the process!

Getting under the hood at scraperwiki.com

As anybody who’s checked out our source code will know, we store a truck-load of information about each scraper, and each run it’s ever made, in a MySQL database. Of the 9727 scrapers that had run since the beginning of June, 601 accessed a twitter.com URL. (Our database only stores the first URL that each scraper accesses on any particular run, so it’s possible that there are scripts that accessed Twitter, but not as the first URL.)

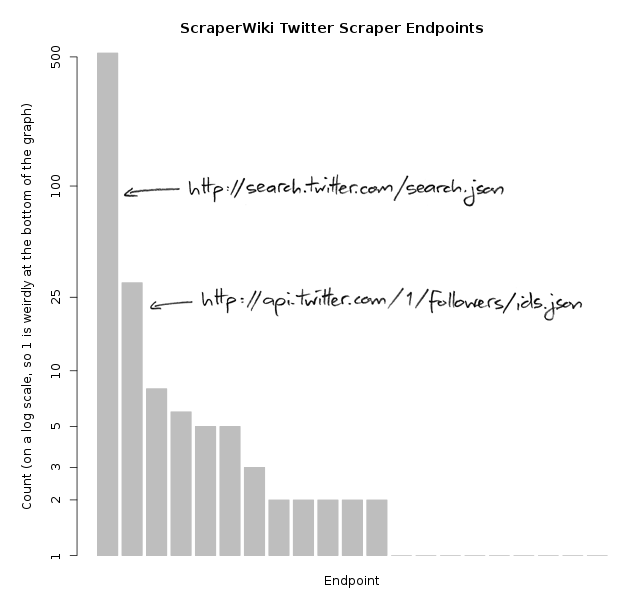

Twitter API endpoints

Getting more specific, these 601 scrapers accessed one of a number of Twitter’s endpoints, normally through the nominal API. We removed the querystring from each of the URLs and then looked for commonly accessed endpoints.
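For the curious, that counting step could look roughly like the Python below. It is a minimal sketch, not the script behind these numbers: the sample URLs and the helper name `strip_querystring` are made up for illustration, and it assumes the first-accessed URLs have already been pulled out of the database into a list.

```python
from collections import Counter
from urllib.parse import urlsplit, urlunsplit


def strip_querystring(url):
    """Return the URL without its query string or fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


# Made-up sample of first-accessed URLs, one per scraper.
first_urls = [
    "http://search.twitter.com/search.json?q=%23ddj&rpp=100",
    "http://search.twitter.com/search.json?q=occupy",
    "http://api.twitter.com/1/followers/ids.json?screen_name=scraperwiki",
    "http://twitter.com/mittromney",
]

# Count how many scrapers hit each endpoint once the querystring is gone.
endpoint_counts = Counter(strip_querystring(u) for u in first_urls)

for endpoint, count in endpoint_counts.most_common():
    print(count, endpoint)
```

Stripping the querystring first is what lets two searches for different terms count as the same endpoint.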

It turns out that search.json is by far the most popular entry point for ScraperWiki users to get Twitter data – probably because it’s the method used by the basic_twitter_scraper that has proved so popular on scraperwiki.com. It takes a search term (like a username or a hashtag) and returns a list of tweets containing that term. Simple!

The next most popular endpoint – followers/ids.json – is a common way to find interesting user accounts to then scrape more details about. And, much to Tom’s amusement, the third endpoint, with 8 occurrences, was http://twitter.com/mittromney. We can’t quite tell whether that’s a good or bad sign for his 2012 candidacy, but if it makes any difference, only one solitary scraper searched for Barack Obama.

Searches

We also looked at what people were searching for. We found 398 search terms in the scrapers that accessed the Twitter search endpoint, but only 45 of these terms appeared in more than one scraper. Some of the more popular ones were “#ddj” (7 scrapers), “occupy” (3 scrapers), “eurovision” (3 scrapers) and, weirdly, an empty string (5 scrapers).
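Here is a hedged sketch of how search terms might be pulled out of the URLs and tallied per scraper. It assumes the term lives in the `q` querystring parameter, and the scraper names, URLs and the `search_term` helper are invented for the example. Passing `keep_blank_values=True` is what lets an empty search string show up as a term in its own right.

```python
from collections import defaultdict
from urllib.parse import parse_qs, urlsplit


def search_term(url):
    """Return the decoded `q` parameter of a URL, or None if it has none."""
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    values = query.get("q")
    return values[0] if values else None


# Made-up (scraper name, first URL) pairs.
scraper_urls = [
    ("scraper_a", "http://search.twitter.com/search.json?q=%23ddj"),
    ("scraper_b", "http://search.twitter.com/search.json?q=%23ddj&rpp=100"),
    ("scraper_c", "http://search.twitter.com/search.json?q=eurovision"),
    ("scraper_d", "http://search.twitter.com/search.json?q="),
]

# Group scrapers by the term they search for.
scrapers_by_term = defaultdict(set)
for name, url in scraper_urls:
    term = search_term(url)
    if term is not None:
        scrapers_by_term[term].add(name)

# Keep only the terms used by more than one scraper.
shared_terms = {t: len(s) for t, s in scrapers_by_term.items() if len(s) > 1}
print(shared_terms)
```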

Even though each particular search term was only accessed a few times, we were able to classify the search terms into broad groups. We sampled from the scrapers that accessed the Twitter search endpoint and manually sorted them into categories that seemed reasonable. We took one sample to come up with mutually exclusive categories and another to estimate the number of scrapers in each category.

A bunch of scripts made searches for people or for occupy shenanigans. We estimate that these people- and occupy-focussed queries together account for between two- and four-fifths of the searches in total.
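One common way to turn a hand-labelled sample into a range like this is a confidence interval for a proportion. The sketch below uses the normal approximation with invented counts (12 of 20 sampled scrapers), purely to show the arithmetic. It is not necessarily how the range above was worked out, and the function name `proportion_interval` is made up.

```python
import math


def proportion_interval(hits, sample_size, z=1.96):
    """Normal-approximation confidence interval for a proportion."""
    p = hits / sample_size
    margin = z * math.sqrt(p * (1 - p) / sample_size)
    # Clamp to [0, 1] because a proportion cannot fall outside that range.
    return max(0.0, p - margin), min(1.0, p + margin)


# Invented counts: 12 of 20 sampled scrapers fall in the category.
low, high = proportion_interval(hits=12, sample_size=20)
print(f"{low:.2f} to {high:.2f}")
```

With a sample this small the interval is wide, which is why an estimate like this comes out as a broad range rather than a single number.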

We also invented some smaller categories that seemed to account for few scrapers each – like global warming, developer and journalism events, towns and cities, and Indonesian politics (!?) – but really it doesn’t seem like there’s any major pattern beyond the people and occupy scripts.

Family Tree

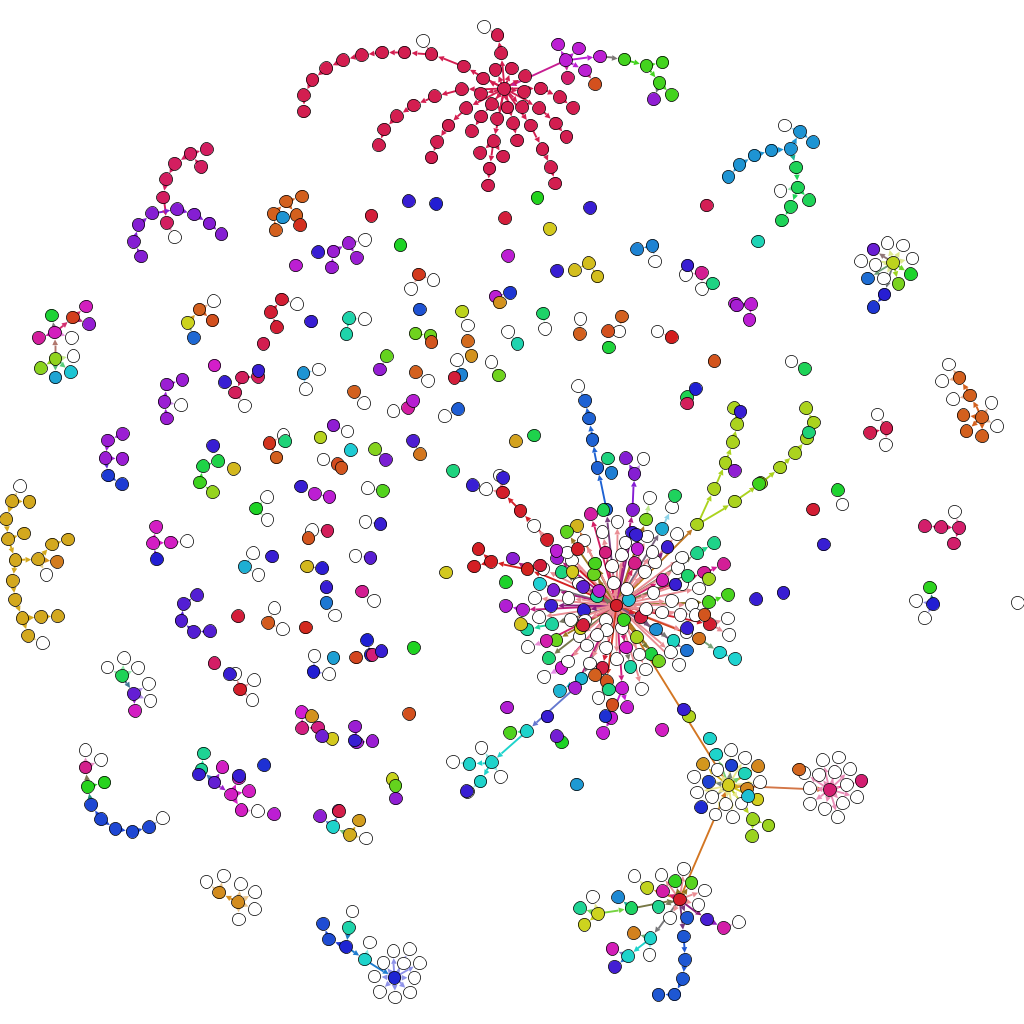

Speaking of the basic_twitter_scraper, we thought it would also be cool to dig into the family history of a few of these scrapers. When you see a scraper you like on ScraperWiki, you can copy it, and that relationship is recorded in our database.

Lots of people copy the basic_twitter_scraper in this way, and then just change one line to make it search for a different term. With that in mind, we’ve been thinking that we could probably make some better tweet-downloading tool to replace this script, but we don’t really know what it would look like. Maybe the users who’ve already copied basic_twitter_scraper_2 would have some ideas…

After getting the scraper details and relationship data into the right format, we imported the whole lot into the open source network visualisation tool Gephi, to see how each scraper was connected to its peers.
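One format Gephi can import is a CSV edge list with `Source` and `Target` columns. The sketch below writes copy relationships out that way. Treat it as an assumption about what “the right format” could look like, not a record of what was actually done: the scraper names and the `edge_list_csv` helper are invented.

```python
import csv
import io


def edge_list_csv(copies):
    """Render (original, copy) pairs as a Source,Target edge list."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Source", "Target"])
    writer.writerows(copies)
    return buffer.getvalue()


# Made-up copy relationships: (scraper that was copied, the new copy).
copies = [
    ("original_scraper", "first_copy"),
    ("original_scraper", "second_copy"),
    ("first_copy", "copy_of_first_copy"),
]

print(edge_list_csv(copies))
```

Saving that text to a file would give something Gephi’s spreadsheet import can read as a directed graph, with each edge pointing from a scraper to its copy.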

By the way, we don’t really know what we did to make this network diagram because we did it a couple weeks ago, forgot what we did, didn’t write a script for it (Gephi is all point-and-click..) and haven’t managed to replicate our results. (Oops.) We noticed this because we repeated all of the analyses for this post with new data right before posting it and didn’t manage to come up with the sort of network diagram we had made a couple weeks ago. But the old one was prettier so we used that :-)

It doesn’t take long to notice basic_twitter_scraper_2’s cult following in the graph. In total, 264 scrapers are part of its extended family, with 190 of those being descendants, and 74 being various sorts of cousins – such as scrape10_twitter_scraper, which was a copy of basic_twitter_scraper_2’s grandparent, twitter_earthquake_history_scraper (the whole family tree, in case you’re wondering, started with twitterhistory-scraper, written by Pedro Markun in March 2011).
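Counts like these can be computed from the copy relationships alone. Below is a minimal sketch with a toy family tree. The node names are placeholders rather than real scrapers, and “other relatives” here loosely means everything in the tree that is neither the target nor one of its descendants, which is an assumption about how the cousins were counted.

```python
from collections import defaultdict


def descendants(node, children):
    """All scrapers reachable from `node` by following copies."""
    found = set()
    stack = [node]
    while stack:
        for child in children.get(stack.pop(), ()):
            if child not in found:
                found.add(child)
                stack.append(child)
    return found


def root_of(node, parent):
    """Walk up the copy chain until a scraper with no recorded original."""
    seen = {node}
    while node in parent and parent[node] not in seen:
        node = parent[node]
        seen.add(node)
    return node


# Toy copy relationships: (scraper that was copied, the new copy).
copies = [
    ("root", "grandparent"),
    ("grandparent", "parent"),
    ("grandparent", "cousin"),
    ("parent", "target"),
    ("target", "child_1"),
    ("child_1", "grandchild_1"),
]

children = defaultdict(set)
for original, copy in copies:
    children[original].add(copy)
parent = {copy: original for original, copy in copies}

target_descendants = descendants("target", children)
root = root_of("target", parent)
family = descendants(root, children) | {root}
other_relatives = family - target_descendants - {"target"}

print(len(target_descendants), "descendants")
print(len(other_relatives), "other relatives")
```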

With the owners of all these basic_twitter_scraper(_2)’s identified, we dropped a few of them an email to find out what they’re using the data for and how we could make it easier for them to gather in the future.

It turns out that Anna Powell-Smith wrote the basic_twitter_scraper at a journalism conference and Nicola Hughes reused it for loads of ScraperWiki workshops and demonstrations as basic_twitter_scraper_2. But even that doesn’t fully explain the cult following because people still keep copying it. If you’re one of those very users, make sure to send us a reply – we’d love to hear from you!!

Explore

We’ve posted our code for this analysis on GitHub, along with a table of information about the 594 Twitter scrapers that aren’t in vaults (out of 601 total Twitter scrapers), in case you’re as puzzled as we are by our users’ interest in Twitter data.

Now here’s a video of a cat playing a keyboard.
