ScraperWiki Datastore – The SQL.

Recently at ScraperWiki we replaced the old datastore, which was creaking under the load, with a new, lighter and faster solution – all your data is now stored in Sqlite tables as part of the move towards pluggable datastores. In addition to the new increase in performance, using Sqlite also provides some other benefits such as allowing us to transparently modify the schema and accessing the data using SQL via the ScraperWiki API or via the Sqlite View. If you don’t know SQL, or just need to try and remember the syntax there is a great SQL tutorial available at w3schools.com which might get you started.

Recently at ScraperWiki we replaced the old datastore, which was creaking under the load, with a new, lighter and faster solution – all your data is now stored in Sqlite tables as part of the move towards pluggable datastores. In addition to the new increase in performance, using Sqlite also provides some other benefits such as allowing us to transparently modify the schema and accessing the data using SQL via the ScraperWiki API or via the Sqlite View. If you don’t know SQL, or just need to try and remember the syntax there is a great SQL tutorial available at w3schools.com which might get you started.

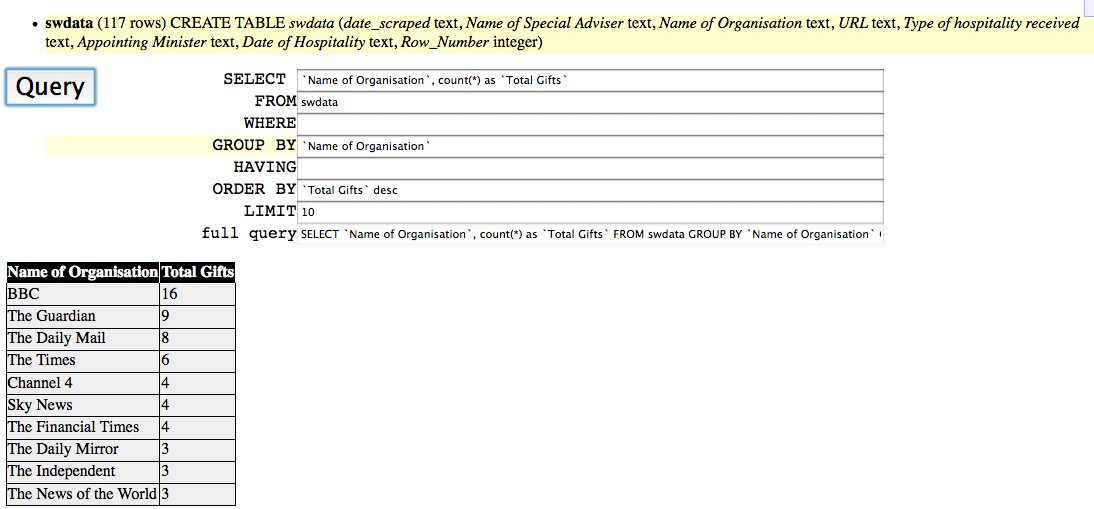

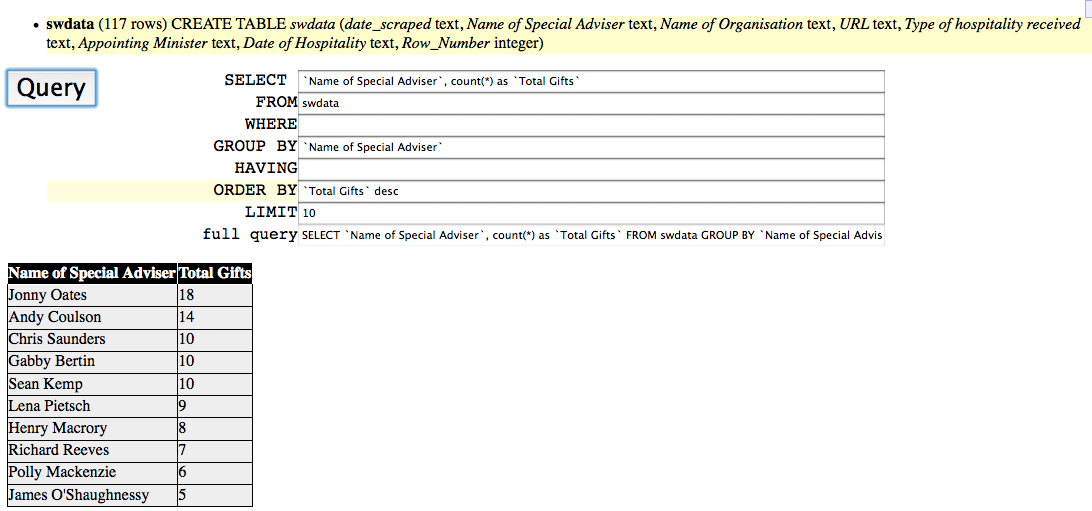

For getting your data out of ScraperWiki you can try using the Sqlite View, which makes it easier to add the fields you want to query as well as performing powerful queries on the data. To explain how you do this we’ll use the Scraper created by Nicola in her recent post Special Treatment for Special Advisers in No. 10 which you can access on ScraperWiki, and from there create a new view. If you choose General Sqlite View, you’ll get a nice easy interface to query and study the data. This dataset shows data from the Cabinet Office (UK central Government) and logs gifts given to advisers for the top ministers – all retrieved by Nicola after only having known how to program for three weeks.

If you’re more confident with your SQL, you can access a more direct interface after clicking the ‘Explore with ScraperWiki API’ link on the overview page for any scraper. This will also give you a link that you can use elsewhere to get direct access to your data in JSON or CSV format. For those that are still learning SQL, or not quite as confident as they’d like to be, using the Sqlite View is a good place to start. When you first get to the Sqlite View you’ll see something similar to the following, but without the data already shown.

As you can see, the view gives you a description of the fields in the Sqlite table (highlighted in yellow) and a set of fields where you can enter the information you require. If you are feeling particularly lazy you can simply click on the highlighted column names and they will be added to the SELECT field for you! Accessing data across scrapers is done slightly differently, and is hopefully the subject of a future post. By default this view will display the output data as a table but you can change it to do what you wish by editing the HTML and Javascript underneath – it is pretty straight forward. Once you have added the fields you wish to find (making sure to use ` to surround any field names with spaces in) clicking the query button will make a request to the ScraperWiki API and display the results on your page. It also shows you the full query so that you can copy your query and save it away for future use.

Now that you have an interface where you can modify your SQL, you can now access your data almost any way you want it! You can do simple queries by just leaving the SELECT field set to * which will return all of the columns, or you can specify the individual columns and the order they will be retrieved. You can even set their title by using the AS keyword. Setting the SELECT field to “`Name of Organisation` AS Organisation” allows will show that field with the new shorter column name.

Aside from ordering your results (putting a field name in ORDER BY, followed by desc if you want descending order), limiting your results (adding the number of records into LIMIT) and the aforementioned renaming of columns, one thing the Sqlite will let you do is group your results to show information that isn’t immediately visible in the full result set. Using the Special Advisers scraper again, the following view shows how by grouping the data on `Name of Organisation` and using the count function in the SELECT field we can show the total number of gifts given by each organisation – surely a lot faster than counting how many times each Organisation appears in the full output!

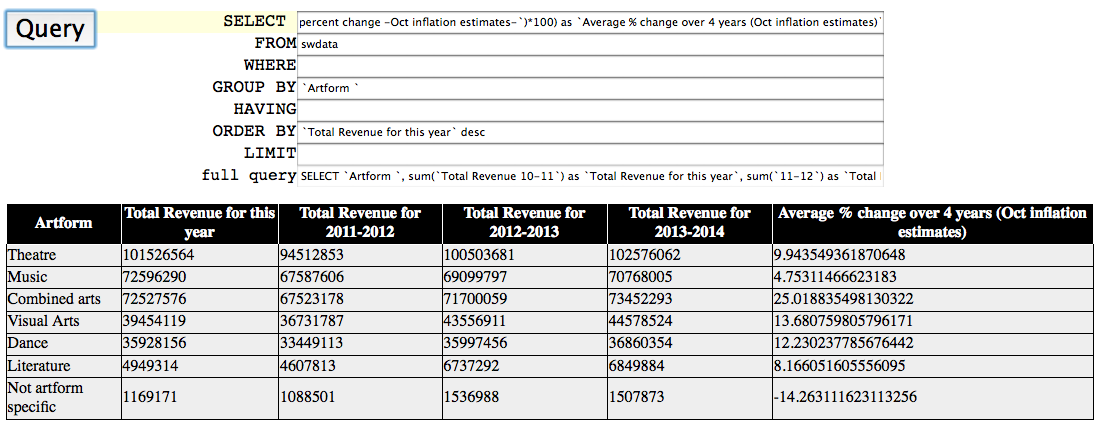

In addition to using the count function in SELECT you could also use sum, or even avg to obtain an average of some numerical values. Not only can you add these individual functions into your SELECT field, you can get a lot more complicated to get a better overall view of the data, as in the Arts Council Cuts scraper. Here you can see the output for the total revenue per year and average percent change by artform and draw your own conclusions on where the cuts are, or are not happening.

SELECT `Artform `,

sum(`Total Revenue 10-11`) as `Total Revenue for this year`,

sum(`11-12`) as `Total Revenue for 2011-2012`,

sum(`12-13`) as `Total Revenue for 2012-2013`,

sum(`13-14`) as `Total Revenue for 2013-2014`,

(avg(`Real percent change -Oct inflation estimates-`)*100)

as `Average % change over 4 years (Oct inflation estimates)`

FROM swdata

GROUP BY `Artform `

ORDER BY `Total Revenue for this year` desc"

If there is anything you’d like to see added to any of these features, let us know either in the comments or via the website.

Is there a document or sample how to get data from another scraper (sqlite table) ? (to transfer data between tables on different scrapers) ?

On the cheat sheet there is a sample

# Get Data and change table name

scraperwiki.sqlite.attach(“new_americ_foundation_drone_strikes”, “src”)

print scraperwiki.sqlite.table_info(“src.swdata”)

but how do I put the scraper/table name from the source table ?

The example before that shows how to do a basic select from the main swdata table:

scraperwiki.sqlite.attach(“new_americ_foundation_drone_strikes”)

print scraperwiki.sqlite.select(“* from new_americ_foundation_drone_strikes.swdata limit 2”)

There “new_americ_foundation_drone_strikes” is the id in the scraper’s URL. swdata is the name of the default table – you can change that to another table.

There’s an example in the Views tutorial:

http://scraperwiki.com/docs/python/python_view_guide/